> be me
> installed VScode to test whether language server is just unfriendly with KATE
> get bombarded with "try our AI!" type BS
> vomit.jpg
> managed to test it, but the AI turns me off
> immediately uninstalled this piece of glorified webpage from my ThinkPad

It seems I’m having to do more jobs with KATE. (Does the LSP plugin for KATE handle stuff differently from the standard in some known way?)

The world is healing

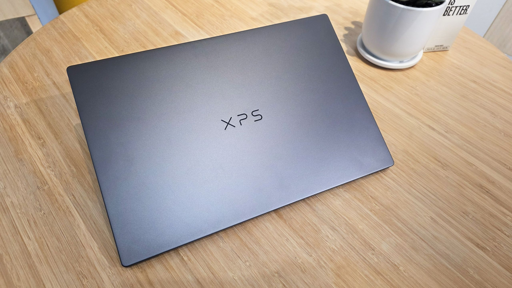

I actually do care about AI PCs. I care in the sense that it is something I want to actively avoid.

It doesn’t confuse us… it annoys us with blatantly wrong information, e.g. that glue is a pizza ingredient.

That’s what happens when you use three-year-old models.

Are you trying to make us believe that AI doesn’t hallucinate?

It doesn’t, it generates incorrect information. This is because AI doesn’t think or dream, it’s a generative technology that outputs information based on whatever went in. It can’t hallucinate because it can’t think or feel.

Hallucinate is the word that has been assigned to what you described. When you don’t assign additional emotional baggage to the word, hallucinate is a reasonable word to pick to describe when an LLM follows a chain of words that have internal correlation but no basis in external reality.

Trying to isolate out “emotional baggage” is not how language works. A term means something and applies somewhere. Generative models do not have the capacity to hallucinate. If you need to apply a human term to a non-human technology that pretends to be human, you might want to use the term “confabulate” because hallucination is a response to stimulus while confabulation is, in simple terms, bullshitting.

> A term means something and applies somewhere.

Words are redefined all the time. Kilo should mean 1000. It was the international standard definition for 150 years. But now in computing it is commonly used to mean 1024.

Confabulation would have been a better choice. But people have chosen hallucinate.

Doesn’t confuse me, just pisses me off by trying to do things I don’t need or want done. It creates problems just to find solutions to them.

Can the NPU at least stand in as a GPU in case you need it?

No, it doesn’t compute graphical information; it is solely for running computations for “AI stuff”.

Nope. Don’t need it

Holy crap that Recall app that “works by taking screenshots” sounds like such a waste of resources. How often would you even need that?

Virtually everything described in this article already exists in some way…

It’s such a stupid approach to the stated problem that I just assumed it was actually meant for something else and the stated problem was there to justify it. I decided never to use Windows 11 on a personal machine because of this “feature”.

What people don’t want is black-box AI agents installed system-wide that use the carrot of “integration and efficiency” to justify bulk data collection that the end user implicitly agrees to by logging into the OS.

God forbid people want the compute they are paying for to actually do what they want, and not work at cross purposes for the company and its various data sales clients.

Unveiling: the APU!!! (ad processing unit)

You spelled “pisses them off” wrong

Stolen from BSKY

That is gold